
This morning, we hosted the second session in our three-part parent workshop series on Artificial Intelligence (AI) Literacy in Education. With one session held in person and another online, families had multiple opportunities to deepen their understanding of how AI is shaping both education and the wider world in which our children are growing up.

Parents were invited to share how they currently use AI at home by writing their examples on post-it notes. Table groups then discussed potential risks or safety issues connected to these tools, setting the stage for a thoughtful and engaging conversation.

To lighten the mood while still highlighting the complexity of AI, we watched a humorous Simpsons clip that illustrated how multifaceted and unpredictable AI systems can be.

Exploring Parent Perspectives on AI Safety

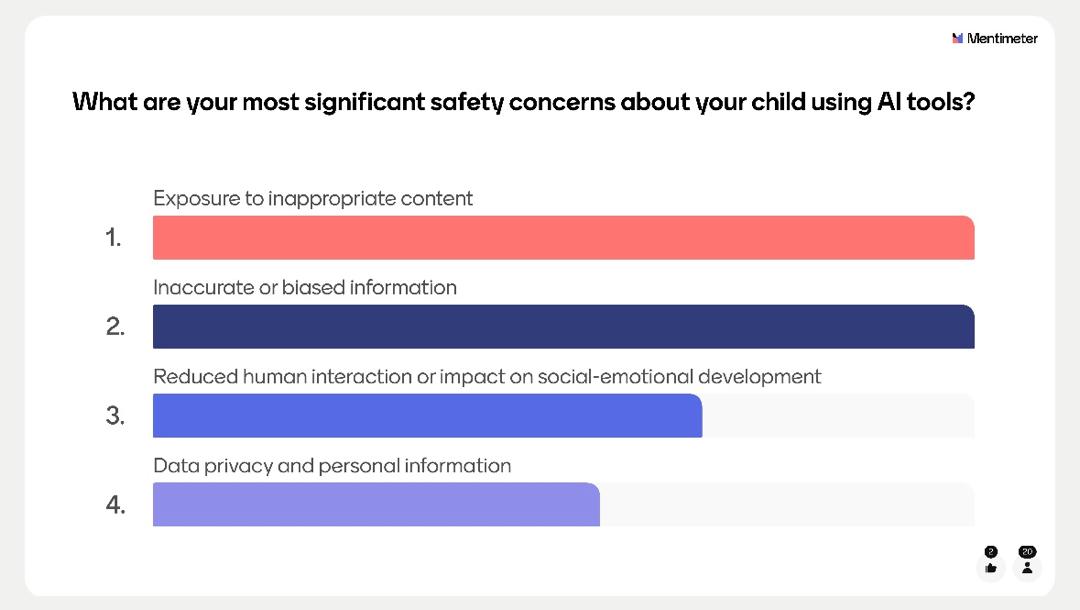

Using a live Mentimeter poll, we gathered feedback from parents on several important questions about AI safety:

- How concerned are you about your child’s safety when using AI tools?

Most parents reported concern toward the higher end of the scale.

- Where do you feel children are most at risk when using AI?

The majority selected “equally at both school and home,” with some choosing “at home.”

- What are your most significant safety concerns?

Parents shared a wide range of thoughtful responses.

- Do you feel your child understands how to use AI safely and responsibly?

Most parents selected “not really” or “not sure.”

- How confident do you feel guiding your child’s AI use?

The majority indicated they felt somewhat confident.

We then discussed why AI safety matters, exploring how rapidly changing technology requires new skills and awareness for both students and adults.

Activity: Can You Tell What’s Real?

Parents participated in an activity using whichfaceisreal.com, a tool that presents two faces, one real and one generated by AI, and challenges users to choose the authentic image. This sparked discussion about AI-generated misinformation and the risks associated with deepfakes, including:

- Fake content can look convincingly real

- Students may unknowingly share false information

- Misinformation can affect learning and research

- AI tools may expose students to inappropriate content

- Deepfakes can be misused to bully, embarrass, or manipulate

Scenario Discussions: Social and Emotional Impacts

Parents then explored a collection of real-world scenarios illustrating how AI intersects with social and emotional wellbeing. Discussion topics included:

- AI friendships: The risk of relying on AI companions and withdrawing from human relationships

- AI as therapy: The lack of accountability, training, and follow-up when children use AI tools for emotional or mental-health support

These conversations helped parents reflect on how important it is to guide children toward balanced, healthy relationships.

Data and Privacy: A Crucial Concern

We also introduced the topic of data and privacy protection, an essential component of AI literacy. Key concerns include:

- Collection of sensitive student data

- Data security, storage, and potential sharing

- Bias, equity, and the influence of algorithms on decision-making

We will explore these issues more deeply during next week’s session on AI Ethics in Education.

Key Takeaways: Staying Safe When Using AI

We concluded with some simple but important reminders for supporting safe and responsible AI use at home:

- Protect personal information

- Think critically before accepting information

- Treat AI as a tool, not a friend

- Use AI appropriately

- Adjust privacy settings regularly

- Log out when finished

#ISUParentBlogs #ISUParentForum
