
This week’s Parent Forum, AI Ethics in Education, sparked thoughtful conversation, honest questions, and a sense of shared responsibility as we navigate a rapidly changing technological landscape together.

We began with an ethical dilemma from the Moral Machine website: what should a self-driving car do when faced with two bad options? This was a strong reminder that AI ethics has real-world consequences.

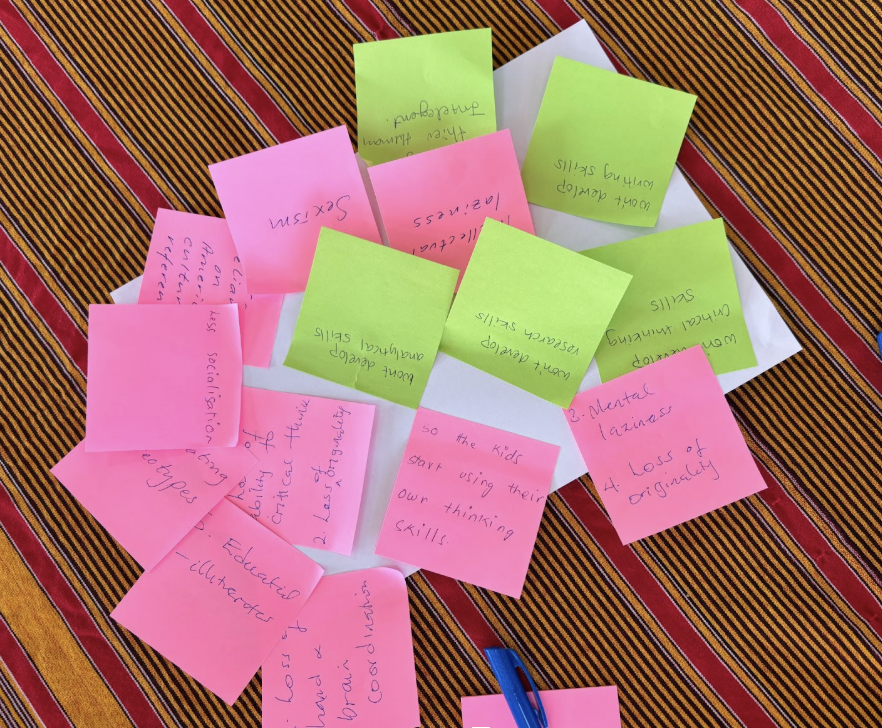

We posed the question: why is AI ethics important for our students? Parents wrote their concerns on Post-it notes and discussed them in groups. Several common themes emerged:

- Over-reliance on AI

- Intellectual laziness or reduced critical thinking

- Struggle to find the right balance between efficiency and dependence

- A sense of unfairness or unequal use among students

- Misinformation and inaccurate AI-generated content

These insights reinforced that families and educators are grappling with the same big questions.

We watched a short video that emphasised that AI isn’t neutral. It illustrated how AI learns from online data sets, which reflect the people and power structures behind the selection of that data. AI is only as good as the examples it learns from, and it can pick up harmful patterns that repeat and amplify bias.

A Bloomberg Technology study asked image-generating AI to create thousands of images of people in different jobs. The models were biased by gender and skin tone: lower-paying jobs were represented by people with darker skin tones, while higher-paying jobs were most often portrayed by light-skinned men.

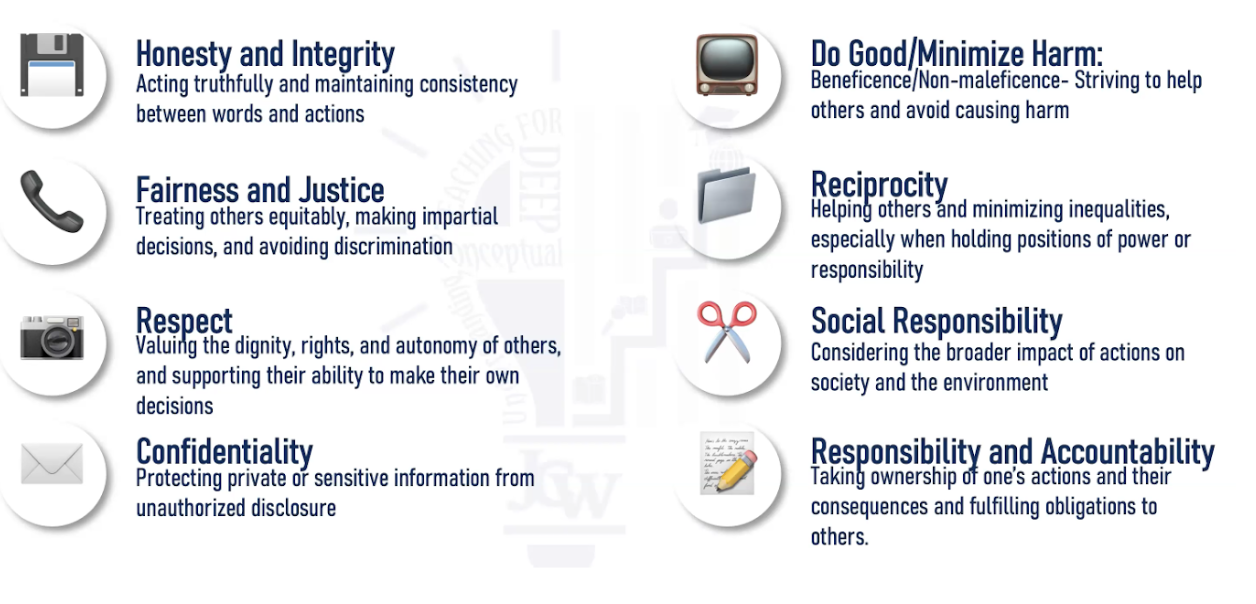

- AI can reinforce stereotypes based on the data it’s trained on (e.g. gender, race, culture).

- Students must learn to question AI outputs and recognise when content may be unfair or inaccurate.

- Ethical AI education includes understanding where bias comes from and how to challenge it.

Participants’ questions about how to tackle bias in AI sparked a great discussion.

- How do younger students learn to recognise bias?

  By grounding lessons in broader concepts (bias, stereotypes, fairness) and connecting them to AI.

- Can prompting help reduce bias?

  Yes. Adding terms such as “culturally diverse,” “human-centred,” or “inclusive” can shape more balanced outputs.

- Where does bias become discrimination?

  When AI recommendations influence decisions that affect people. This is why human judgment must remain central.

We also recognised that many of these concerns—bias, fairness, integrity—aren’t new. AI has simply amplified them, making the need for education even more urgent.

Academic Integrity and AI

We went on to explore Academic Integrity in the context of AI. Some questions to consider included:

- Who is the author of AI-assisted work?

- How do we ensure learning remains authentic?

- How do we balance support with accountability?

Our school’s approach to Academic Integrity includes the following:

- Strong Relationships with Students: Teachers know their students’ voices, thinking, and habits. This makes it easier to recognise when work reflects—or doesn’t reflect—authentic learning.

- Varied and Purposeful Assessment: We intentionally combine timed tasks, in-class activities, oral reflections, and process journals. These formats prioritise understanding over polished output.

- Clear Expectations and Transparency: Students are told when AI may or may not be used, and are encouraged to disclose how they used AI. Academic integrity requires honesty, reflection, and responsibility—values at the core of the IB.

- AI Detection Tools: Tools like Turnitin can help identify unoriginal or AI-generated content, but they are only one additional layer of our approach. Our priority remains educating our learners in AI literacy so that they are empowered to make the right choices.

Senior School students are required to sign our Responsible Use Policy and adhere to our AI Student Handbook. Key expectations include:

Ethical AI Use:

- Students will refrain from using AI to mislead, discriminate against, mock, or harm others.

- Students will actively consider the impact of AI on themselves, their peers, the broader community and the environment.

Academic Integrity:

- Students will use AI in alignment with the school’s academic integrity policies, ensuring AI use is disclosed, appropriately cited and used ethically.

One area that is sometimes overlooked is the environmental footprint of AI. Large AI models require significant energy and resources. Ethical use therefore includes:

- Choosing when AI is necessary (and when it isn’t)

- Discussing digital consumption and sustainability with students

- Recognising that just because AI can do something doesn’t mean we should use it

Building Strong AI Literacy

Ultimately, our goal is clear: prepare students for a world being transformed by AI. AI literacy—understanding how AI works, its limitations, its risks, and its ethical considerations—brings together all the themes explored in our forum. While some schools are choosing to limit access to keep children safe, we choose to educate our children within the context of our values so that they are equipped to make informed and principled decisions.

With strong AI literacy, students learn to:

- Think critically

- Make ethical choices

- Use AI responsibly

- Protect themselves and others

- Understand the impacts of technology on society and the environment

In the interests of ethical transparency, we used ChatGPT to help us organise our notes into this blog!

If you are in Kampala next week, join us at the Lecture Series! Read on for registration details:


#ISUParentBlogs #ISUParentForum
